What is the difference between biological and artificial neural networks?

Psychology & Neuroscience Asked by meowthecat on April 26, 2021

I read that neural networks are of two types:

a) Biological neural networks

b) Artificial neural networks (or ANN)

I read, “Neural Networks are models of biological neural structures,” and the biological neural structure referred to the brain in the context of that text. Is the brain called a biological neural network? If not, what is the difference between the two terms?

3 Answers

I am a Statistics student at the University of Warwick (incoming at Stanford University), and I have an interest in explaining machine learning concepts in a non-mathematical, non-technical way.

Biological Neural Network

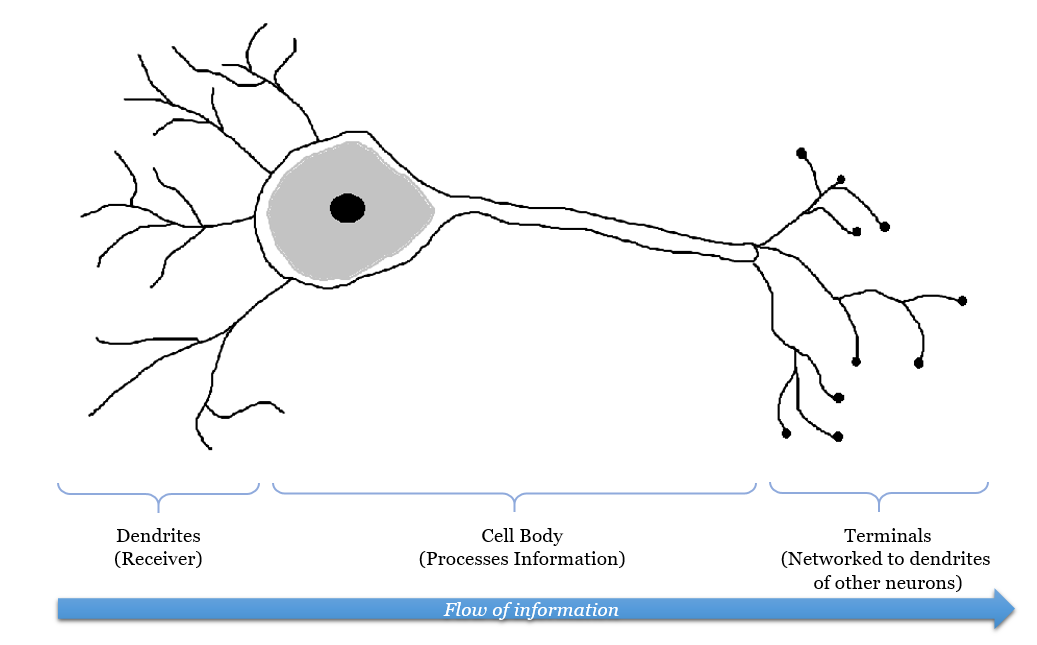

Our brain has a large network of interlinked neurons, which act as a highway for information to be transmitted from point A to point B. To send different kinds of information from A to B, the brain activates a different set of neurons, and so essentially uses a different route to get from A to B. This is what a typical neuron might look like.

At each neuron, its dendrites receive incoming signals sent by other neurons. If the neuron receives a high enough level of signals within a certain period of time, the neuron sends an electrical pulse into the terminals. These outgoing signals are then received by other neurons.

Artificial Neural Network

The ANN model is modelled after the biological neural network (hence its name). In the ANN model, we have an input node (in this example we give it an image of a handwritten number 6) and an output node, which is the digit that the program recognized.

A simple Artificial Neural Network map, showing two scenarios with two different inputs but with the same output. Activated neurons along the path are shown in red.

The main characteristics of an ANN are as follows:

Step 1. When the input node is given an image, it activates a unique set of neurons in the first layer, starting a chain reaction that would pave a unique path to the output node. In Scenario 1, neurons A, B, and D are activated in layer 1.

Step 2. The activated neurons send signals to every connected neuron in the next layer. This directly affects which neurons are activated in the next layer. In Scenario 1, neuron A sends a signal to E and G, neuron B sends a signal to E, and neuron D sends a signal to F and G.

Step 3. In the next layer, each neuron is governed by a rule on which combinations of received signals activate it (the rules are learned when we give the ANN program training data, i.e. images of handwritten digits together with the correct answers). In Scenario 1, neuron E is activated by the signals from A and B. However, for neurons F and G, their rules tell them that they have not received the right signals to be activated, and hence they remain grey.

Step 4. Steps 2-3 are repeated for all the remaining layers (it is possible for the model to have more than 2 layers), until we are left with the output node.

Step 5. The output node deduces the correct digit based on signals received from neurons in the layer directly preceding it (layer 2). Each combination of activated neurons in layer 2 leads to one solution, though each solution can be represented by different combinations of activated neurons. In Scenarios 1 & 2, two different images are given to the input. Because the images are different, the network activates a different set of neurons to get from the input to the output. However, the output node is still able to recognise that both images are “6”.
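For readers who like to see this in code, Steps 1 to 3 can be sketched roughly as below. This is only an illustration: the connection weights and the firing threshold are made-up values, picked so that the result matches Scenario 1, and the names `WEIGHTS`, `THRESHOLD` and `next_layer` are hypothetical. A real network learns its weights from training data.

```python
# Hypothetical weights, chosen only so that the result matches Scenario 1.
# Keys are layer-2 neurons; values map each connected layer-1 neuron to a weight.
WEIGHTS = {
    "E": {"A": 0.5, "B": 0.5},
    "F": {"D": 0.5},
    "G": {"A": 0.4, "D": 0.4},
}
THRESHOLD = 1.0  # assumed rule: a neuron fires when its weighted input reaches this


def next_layer(active, weights, threshold=THRESHOLD):
    """Return the set of next-layer neurons activated by the active neurons."""
    fired = set()
    for neuron, incoming in weights.items():
        # Only signals from currently active neurons count towards the total.
        total = sum(w for source, w in incoming.items() if source in active)
        if total >= threshold:
            fired.add(neuron)
    return fired


layer1_active = {"A", "B", "D"}  # Step 1 in Scenario 1
layer2_active = next_layer(layer1_active, WEIGHTS)
print(sorted(layer2_active))  # ['E']
```

With these values, E receives enough signal from A and B to fire, while F and G stay inactive, just as in the description of Scenario 1.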

Read the full post here, which includes some examples of handwritten digits that were used: https://annalyzin.wordpress.com/2016/03/13/how-do-computers-recognise-handwriting-using-artificial-neural-networks/

Correct answer by Kenneth Soo on April 26, 2021

Artificial neural networks (ANNs) are mathematical constructs, originally designed to approximate biological neurons. Each "neuron" is a relatively simple element: for example, it sums its inputs and applies a threshold to the result to determine its output.
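As a minimal sketch of such an element (the function name, weights and threshold are illustrative assumptions, not taken from any particular library):

```python
def threshold_neuron(inputs, weights, threshold):
    """Sum the weighted inputs; output 1 if the sum reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# With these assumed weights the neuron behaves like a logical AND of two inputs.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, threshold_neuron([a, b], [1.0, 1.0], threshold=2.0))
```

Changing the weights or the threshold changes which input combinations make the "neuron" fire, and these are the quantities that training adjusts.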

Several decades of research went into discovering how to build network architectures using these mathematical constructs, and how to automatically set the weighting on each of the connections between the neurons to perform a wide range of tasks. For example, ANNs can do things like recognition of hand-written digits.

A "biological neural network" would refer to any group of connected biological nerve cells. Your brain is a biological neural network; so is a number of neurons grown together in a dish so that they form synaptic connections. The term "biological neural network" is not very precise; it doesn't define a particular biological structure.

In the same way, an ANN can mean any of a large number of mathematical "neuron"-like constructs, connected in any of a large number of ways, to perform any of a large number of tasks.

Answered by Dylan Richard Muir on April 26, 2021

I'm studying computer science at KIT (Karlsruhe Institute of Technology, Germany), specializing in Machine Learning with a minor in Mathematics. I am not a biologist.

An artificial neural network is basically a mathematical function. It is built from simple functions which have parameters (numbers) which get adjusted (learned). One example of such a function is:

$$\varphi(x_1, \dots, x_n) = \frac{1}{1+e^{\sum_{i=1}^n w_i x_i}} \text{ with } w_i \in \mathbb{R}, x_i \in \mathbb{R}$$

As you can see, there are parameters $w_i$ which can be set to any number. The $x_i$ are the inputs. But those inputs themselves can be the output of another function $\varphi$.
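A small sketch of this in Python, following the formula above as it is written (the more common logistic function has a minus sign in the exponent, which for real-valued weights just amounts to flipping the sign of every $w_i$). The input values and weights below are arbitrary numbers chosen for illustration:

```python
import math


def phi(xs, ws):
    """Evaluate 1 / (1 + e^(sum_i w_i * x_i)) for inputs xs and weights ws."""
    s = sum(w * x for w, x in zip(ws, xs))
    return 1.0 / (1.0 + math.exp(s))


# The outputs of two such functions become the inputs of a third one.
x = [0.5, -1.0]                  # arbitrary example inputs
h1 = phi(x, [1.0, 2.0])          # arbitrary example weights
h2 = phi(x, [-0.5, 0.3])
y = phi([h1, h2], [1.5, -2.0])
print(y)
```

The result `y` is still a single number between 0 and 1, but it now depends on six parameters instead of two. Stacking more of these functions is how the parameter count grows.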

A common way to visualize those simple functions is

There are thousands of those simple functions (also called "neurons") which build an artificial neural network. This results in a single function which has millions of parameters in the biggest networks. Neural networks use relatively simple topologies which can often be expressed in a tree structure:

Every single circle is one neuron (also called "perceptron" in some cases).

You have lots of training data, train once for a long time, then use the network often. A long time often means several days.

In an artificial neural network (ANN), the signal is sent synchronously.

As far as I know, the human brain isn't fully understood yet. From what I've heard, the basic building blocks are also called neurons. They look like this:

The idea is that axon terminals of one neuron that are closer to the axon hillock of another neuron have more influence on the output signal. But the relationship is different: it is a process which is much better described by differential equations, not something as simple as the equation for artificial neurons above. The neurons send their signals asynchronously. According to Wikipedia, humans have about 86 billion neurons. They are certainly restricted in how they can be connected, but there are also certainly many billions of parameters which can be adjusted in "standard human brains".
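To give a feeling for what "described by differential equations" can mean, here is a rough sketch of the leaky integrate-and-fire model, a heavily simplified textbook description of a single neuron and not a claim about how real neurons are best modelled. The membrane potential $V$ follows $\tau \, dV/dt = -(V - V_{rest}) + I$, and the neuron emits a spike and resets whenever $V$ reaches a threshold. All parameter values below are arbitrary, unitless assumptions:

```python
def simulate_lif(input_current, steps=1000, dt=0.1,
                 tau=10.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Euler-integrate tau * dV/dt = -(V - v_rest) + I and count the spikes."""
    v = v_rest
    spikes = 0
    for _ in range(steps):
        v += dt * (-(v - v_rest) + input_current) / tau
        if v >= v_threshold:
            spikes += 1   # the neuron fires ...
            v = v_reset   # ... and its potential is reset
    return spikes


print(simulate_lif(0.5))  # weak input: the potential levels off below the threshold
print(simulate_lif(1.5))  # stronger input: the neuron fires repeatedly
```

Unlike the artificial neuron above, the output here depends on how the input develops over time, not only on its current value.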

So far, we don't know how humans really (exactly, in all details) learn. This is certainly a key difference. But we don't learn once for a long time and then stop learning. We learn continuously, in very short intervals.

See also: On-line Recognition of Handwritten Mathematical Symbols, Chapter 4.3 for more details.

TL;DR and some more facts

- Signal transport and processing: The human brain works asynchronously, ANNs work synchronously.

- Parameter count: Humans have many billions of adjustable parameters (businessinsider.de writes "1,000 trillion") and about 86 billion neurons (source). Even the most complicated ANNs only have several million learnable parameters.

- Training algorithm: ANNs use gradient descent for learning. Human brains use something different (but we don't know what).

- Processing speed: Single biological neurons are slow, while standard neurons in ANNs are fast. (No, I did not confuse the two. The artificial ones are faster. And I ignore spiking networks, as I haven't seen a single example where they are actually useful.)

- Topology: Biological neural networks have complicated topologies, while ANNs often have a tree structure. (I am aware of recurrent networks; they are still pretty simple in their structure.)

- Power consumption: Biological neural networks use very little power compared to artificial networks

- Biological networks usually don't stop / start learning. ANNs have different fitting (train) and prediction (evaluate) phases.

- Field of application: ANNs are specialized. They can only perform one task. They might be perfect at playing chess, but they fail at playing go (or vice versa). Biological neural networks can learn completely new tasks.

- ANNs need a target function. You need to tell them what is good and what is bad. I'm not sure if you can compare this to e.g. pain in biological networks.
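The points about gradient descent, the separate training and prediction phases, and the target function can be illustrated with a tiny sketch: one artificial neuron trained on toy data. Everything here is an assumption made for illustration: the data (logical OR), the bias term `b`, the cross-entropy loss, the learning rate and the step count. The code uses the standard logistic function with a minus sign in the exponent.

```python
import math


def predict(x, w, b):
    """Prediction phase: apply the learned parameters without changing them."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))  # standard logistic function


def train(data, steps=2000, learning_rate=1.0):
    """Training phase: gradient descent on the mean cross-entropy loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, target in data:
            error = predict(x, w, b) - target  # gradient of the loss w.r.t. z
            for i, xi in enumerate(x):
                grad_w[i] += error * xi / len(data)
            grad_b += error / len(data)
        # Move every parameter a small step against its gradient.
        w = [wi - learning_rate * gi for wi, gi in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return w, b


# Toy data: the targets tell the neuron what is "good" and what is "bad".
DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train(DATA)
print([round(predict(x, w, b)) for x, _ in DATA])
```

After `train` has finished, `w` and `b` stay fixed and `predict` can be called as often as needed. That is the "train once, use often" pattern described above.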

Answered by Martin Thoma on April 26, 2021
